Expression Detection AI Concept

Using facial expression analysis to drive customer engagement

Published on: September 26, 2024

The Impulse

Interest in AI-powered emotion recognition is growing because the technology could change how people interact with computers and give many industries deeper insight into human behavior and emotional states. It promises to bridge the gap between logical machines and the complex, emotional world of human beings.

The Challenge

Developing AI systems that detect emotion from facial expressions involves several challenges:

Accuracy: Ensuring reliable detection across diverse facial features, expressions, and cultural contexts.

Privacy Concerns: Balancing the need for data with individuals' rights to privacy and consent.

Ethical Considerations: Addressing potential misuse and bias in emotion recognition systems.

Real-time Processing: Achieving low-latency analysis for live applications.

Contextual Understanding: Interpreting emotions within broader situational contexts.
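The accuracy and bias challenges above become easier to track once they are measured per group. The following is a minimal sketch, not a prescribed evaluation method: it assumes a labeled evaluation set whose records carry a group tag, and the names `accuracy_by_group`, `accuracy_gap`, and the sample records are hypothetical, invented only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted_label in records:
        total[group] += 1
        if true_label == predicted_label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical evaluation records, invented purely for illustration.
records = [
    ("group_a", "happy", "happy"),
    ("group_a", "sad", "sad"),
    ("group_a", "neutral", "neutral"),
    ("group_a", "angry", "neutral"),
    ("group_b", "happy", "happy"),
    ("group_b", "sad", "neutral"),
    ("group_b", "neutral", "neutral"),
    ("group_b", "angry", "sad"),
]

per_group = accuracy_by_group(records)
print(per_group)
print(accuracy_gap(per_group))
```

A large gap between groups suggests the training data or model needs attention before deployment. What counts as an acceptable gap is a product and ethics decision that this sketch does not settle.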

Solution Approach

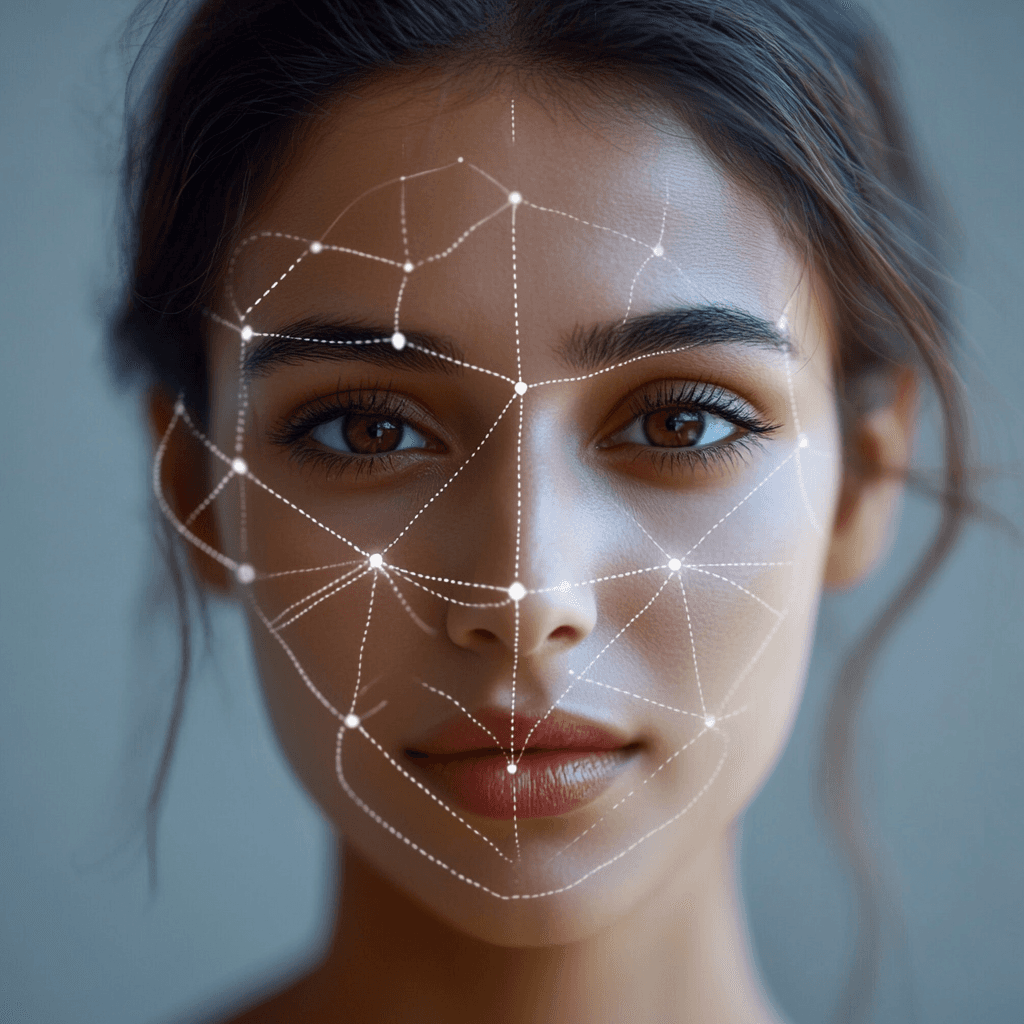

Several cutting-edge technologies are crucial for implementing emotion recognition systems:

Convolutional Neural Networks (CNNs): These deep learning models excel at image analysis and are fundamental to facial expression recognition.

Transfer Learning: Leveraging pre-trained models to improve accuracy and reduce training time.

Edge Computing: Enabling on-device processing for faster, more private emotion detection.

Computer Vision Libraries: Tools like OpenCV and dlib for image preprocessing and facial landmark detection.

Deep Learning Frameworks: TensorFlow, PyTorch, and Keras for building and training emotion recognition models.
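Whichever framework trains the CNN, its final layer typically produces one raw score per emotion class, which then has to be turned into a label. The sketch below illustrates only that last step, using the standard library. The seven-class label set (the one used by the public FER-2013 dataset), the `classify` helper, the 0.5 confidence threshold, and the sample scores are all assumptions made for illustration; the model itself is not shown.

```python
import math

# Assumed label set: the seven classes used by the public FER-2013 dataset.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, min_confidence=0.5):
    """Return (label, probability); label is 'uncertain' below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return "uncertain", probs[best]
    return EMOTIONS[best], probs[best]


# Hypothetical model outputs for two face crops.
confident = classify([0.1, -1.2, 0.0, 4.0, 0.3, 1.1, 0.8])
ambiguous = classify([1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0])
print(confident)
print(ambiguous)
```

Returning an explicit "uncertain" result instead of always choosing the top class is one way to avoid acting on weak predictions. The right threshold depends on the application.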

To turn these techniques into an efficient, scalable, and flexible emotion recognition solution, consider the following tools:

OpenCV: An open-source computer vision library for image and video processing.

TensorFlow: A comprehensive machine learning platform for building and deploying models.

Keras: A high-level neural network API that runs on top of TensorFlow and, since Keras 3, also on JAX and PyTorch.

ONNX: An open format for machine learning models, enabling interoperability between frameworks.

Docker: For containerization, ensuring consistent deployment across environments.

Kubernetes: For orchestrating containerized applications, enabling scalability.

Flask or FastAPI: Lightweight web frameworks for creating APIs around the emotion recognition model.

Redis: An in-memory data structure store, useful for caching to improve response times.

AWS SageMaker or Google Cloud Vertex AI (the successor to AI Platform): Cloud platforms for training, deploying, and managing machine learning models at scale.

Grafana and Prometheus: For monitoring system performance and model accuracy in production.
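To illustrate how caching can improve response times in such a stack, here is a dependency-free sketch of the idea. It is an assumption-laden stand-in, not a reference architecture: a plain dictionary plays the role of Redis, `fake_model` is a hypothetical placeholder for the real inference call, and `CachedPredictor` is an invented name. In a real deployment the same logic would sit behind a Flask or FastAPI endpoint and use a Redis client with an expiry time on each entry.

```python
import hashlib


class CachedPredictor:
    """Wrap a prediction function with a cache keyed by image content."""

    def __init__(self, predict_fn, cache=None):
        self.predict_fn = predict_fn
        # A dict stands in for Redis here; any mapping-like store would work.
        self.cache = {} if cache is None else cache
        self.model_calls = 0

    def predict(self, image_bytes):
        # Identical images hash to the same key, so they hit the cache.
        key = hashlib.sha256(image_bytes).hexdigest()
        if key in self.cache:
            return self.cache[key]
        self.model_calls += 1
        result = self.predict_fn(image_bytes)
        self.cache[key] = result
        return result


def fake_model(image_bytes):
    """Hypothetical placeholder for the real emotion recognition model."""
    return {"emotion": "neutral", "confidence": 0.9}


predictor = CachedPredictor(fake_model)
first = predictor.predict(b"frame-1")
second = predictor.predict(b"frame-1")  # served from the cache
third = predictor.predict(b"frame-2")   # new content, so the model runs again
print(predictor.model_calls)
```

Content-based keys only help when the same image is submitted more than once, for example with retried requests or repeated uploads. Consecutive live video frames rarely match byte for byte, so this kind of cache suits request-level reuse more than frame-level reuse.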

The Results

By combining the technologies and tools above, organizations can build robust AI-powered emotion recognition systems that provide valuable insights and scale efficiently as demand grows. As the technology evolves, we can expect more nuanced, context-aware applications that deepen our understanding of human emotions and improve human-computer interaction across domains.

Further Use Cases

Implementing AI-powered emotion recognition offers several advantages across various industries:

Customer Service

Real-time Feedback: Analyzing customer emotions during interactions to improve service quality.

Personalized Experiences: Tailoring responses based on detected emotional states.

Healthcare

Mental Health Monitoring: Assisting in the early detection of mood disorders.

Pain Assessment: Helping medical professionals gauge patient discomfort levels.

Education

Engagement Tracking: Monitoring student emotions to optimize learning experiences.

Adaptive Learning: Adjusting course difficulty based on learner frustration or boredom.

Marketing and Advertising

Ad Effectiveness: Measuring emotional responses to marketing campaigns.

Product Testing: Gauging consumer reactions to new products or designs.

Automotive Industry

Driver Monitoring: Detecting fatigue or distraction to enhance road safety.

In-car Experience: Adjusting vehicle settings based on occupant emotions.

Entertainment and Gaming

Adaptive Gameplay: Modifying game difficulty or storylines based on player emotions.

Film and TV Analytics: Analyzing audience reactions to content.

Human Resources

Interview Analysis: Assisting in candidate evaluation during job interviews.

Employee Wellbeing: Monitoring workplace stress levels and job satisfaction.
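The adaptive learning and adaptive gameplay use cases above share one pattern: smooth noisy per-frame emotion scores, then act only on a sustained signal. The sketch below shows one way this might look. The function names, the 0.7 threshold, the 1 to 10 difficulty scale, and the sample scores are all assumptions chosen for illustration.

```python
def smooth(scores, alpha=0.5):
    """Exponential moving average over per-frame scores in [0, 1]."""
    smoothed = []
    current = scores[0]
    for score in scores:
        current = alpha * score + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


def adjust_difficulty(level, frustration, boredom,
                      high=0.7, min_level=1, max_level=10):
    """Lower difficulty on sustained frustration, raise it on sustained boredom."""
    if frustration >= high and frustration >= boredom:
        return max(min_level, level - 1)
    if boredom >= high:
        return min(max_level, level + 1)
    return level


# Hypothetical per-frame scores from an emotion recognition model.
frustration_frames = [0.2, 0.9, 0.8, 0.9, 0.85]
boredom_frames = [0.1, 0.1, 0.2, 0.1, 0.1]

frustration = smooth(frustration_frames)[-1]
boredom = smooth(boredom_frames)[-1]
new_level = adjust_difficulty(5, frustration, boredom)
print(new_level)
```

Smoothing keeps a single misread frame from changing the experience, and clamping the level to a fixed range keeps the adjustment bounded.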

Updated on: October 6, 2024
